Meta Is Right to Fire the Fact-Checkers

The fight against 'misinformation' is plagued by error and liberal bias. It's not an appropriate pursuit for a neutral platform.

Dear readers,

Facebook is standing down in its efforts to use fact-checking to suppress “misinformation,” dropping its partnerships with third-party fact-checking organizations and turning to a user-driven “community notes” model similar to the one on X. This was inevitable — a top-down infrastructure to stop false ideas from spreading proved ineffective on several dimensions. Content moderation is a human project, and the fact-checkers (on whom the content moderators have relied to decide what’s true) invariably bring their preferences and biases to the fact-check process, and those biases have overwhelmingly gone leftward. Instead of helping a lot of people see the light (or whatever), this has led much of the population to view moderation efforts with appropriate hostility. Of course, it didn’t help that Facebook was also suppressing a wide variety of ideological views and unpleasant opinions, a practice it will also wind down.

As Reed Albergotti writes for Semafor, Facebook’s approach to moderation was a “failed experiment,” and now it’s over.

Of course, the anti-misinformation advocates are losing their shit; Casey Newton writes Meta “has all but declared open season on immigrants, transgender people and whatever other targets that Trump and his allies find useful in their fascist project.” Often, advocates of strong-handed moderation don't seem to know what hit them; ironically, that bewilderment arises from their own entrapment in a filter bubble. They see that they face political opposition. But when you operate in a bubble where all information is filtered by someone who thinks like you do, you’re unlikely to understand exactly why your opponents oppose you. In this instance, anti-misinformation advocates are steeped in years of news coverage and discussion of the issue that takes “misinformation experts” seriously as the exponents of a scientific and objectively correct method for controlling information — and treats opponents of the old moderation regime as people who are misinformed about misinformation and how it should be handled.

To see how one might end up in this filter bubble, check out the New York Times coverage from 2022 of the political fighting over the short-lived Disinformation Governance Board at the Department of Homeland Security. The coverage (written by the paper’s beat reporter on misinformation, who like all other “misinformation reporters” is totally in the tank for a specific liberal view of how misinformation should be defined and managed) took for granted that the board was an institution that would protect free speech; people who posited that the board would be used to suppress speech were themselves spreaders of misinformation. How did we know the board would protect speech? Well, its internal documents said so, and the board, staffed by experts, was the expert authority on its own benign nature. During this period, the federal government had been pressuring platforms like Twitter and Facebook to suppress certain disfavored speech about topics including the COVID pandemic. The idea that one might have an authentic, not-based-in-misinformation reason not to trust the “misinformation experts” doesn’t really rate in the coverage; the only objection given credence in the Times coverage, because it was raised by liberal groups, is that the board might be misused in the future if it ever were to come under the control of Republicans.

This error of putting so much stock in “the experts” is particularly galling because the misinformation “experts” are, by and large, a ridiculous cadre of people operating in a fake domain of expertise.

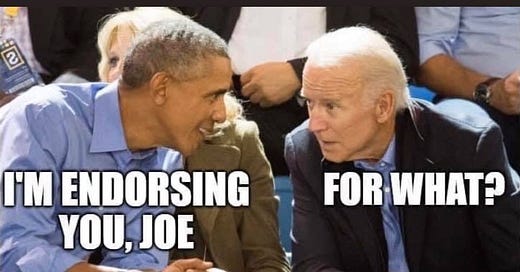

Consider, for example, Sander van der Linden, a professor at Cambridge University. He is considered a leading expert on misinformation and is the author of an acclaimed book about how to fight it, Foolproof. And he is really, really adamant that the meme below constitutes “misinformation” and was correctly classified as such in a 2023 paper:

The meme, of course, is a joke, and the political message that underlies it cannot be false because it is an opinion and an analysis, not a factual claim.

Van der Linden says it was nonetheless misinformation and classified correctly as such in an academic paper. “The study classified this image as political misinformation because it plays into the well-documented false narrative that Biden is senile (notably fact-checked as false),” he wrote last December. Ah, there we go — Biden’s senility was “notably fact-checked as false,” and therefore you were misinforming people if you joke about him being confused and clueless. Furthermore, van der Linden also said the meme was election misinformation because it implied that Biden would not seek re-election — also not a factual claim but a prediction, and one that turned out to be true.

Even after Biden’s disastrous debate in June 2024, van der Linden continued to defend his view that the meme had been misinformation. After all, “experts” had declared that Biden was not senile.[1] This led political scientist Christian Pieter Hoffman to note that, for quite a while, journalists and ordinary people had been watching Biden’s performances and had come to suspect that he was losing a step. “Are you saying citizens or journalists should have to wait for experts (which?) to confirm his infirmity before they can address this issue?” he asked.

“Of course,” van der Linden replied. “We can't just be saying random stuff without expert assessment, especially on medical issues.”

Van der Linden, like many of those who seek to fight misinformation, relies on the supposedly objective body of work that comes from fact-checkers. Unfortunately, the PolitiFact fact-check that he relied on to contend the meme was misinformation both isn’t very good and doesn’t say what van der Linden claims it does — the fact-check, written when Biden was several years younger, made the narrow contention that it was false to say there’s no doubt Biden is senile, if you define “senile” as specifically meaning that he has dementia. Fox News political analyst Brit Hume, who made the offending “senile” statement, had an obvious rejoinder: “senile” is a colloquial term that doesn’t necessarily imply a specific medical diagnosis. As such, his statement was an opinion and really shouldn’t have been fact-checked at all.

One of the big problems with fact-checkers has been their frequent inability to even understand what a fact is. In 2023, New York Times reporter Michael Shear fact-checked Chris Christie's accusation that “Joe Biden hides in his basement,” declaring “this is false.” Of course, Christie's basement comment was a metaphor, and the underlying metaphorical claim (that Biden was limiting his schedule of public events because of his diminished capacity) was an inference that Christie drew from facts he observed about Biden's schedule and behavior. The Times declared the statement false on the grounds that Biden does public events (“Mr. Biden travels frequently around the country and around the world”), even though Christie didn’t say Biden always hides in his basement. Later reporting (particularly from the Wall Street Journal) made clear that Biden’s team really was cutting back his schedule to accommodate the limitations that have come with age. Christie inferred correctly, much more correctly than I did at the time, and the Times should not have expressed such certainty about the issue.

The anti-misinformation apparatus is almost always misused in the same direction: in favor of liberal ideas and arguments. The two examples I discuss above both served the same purpose: to protect President Biden from well-warranted scrutiny of his mental acuity. But we also saw this abuse with the Hunter Biden laptop story (preemptively deemed to be misinformation by various social media platforms), with the COVID lab-leak theory (declared “debunked” by the Washington Post, which later corrected itself) and countless other stories.

Despite the garment-rending over a new regime that tries less hard to force people to say the right things, I think the timing of Meta’s change and the broader “vibe shift” on misinformation is actually good for the left, for a couple of reasons. One is that as conservative ideas become ascendant within institutions like Meta, the left may draw protection from a regime that gives up on the effort to enforce approved ideas from on high. But an even bigger reason is that fact-checkers embody some of the most annoying personality traits that prevail among liberals. Nosy, humorless, and always convinced they know better than you, they are HR-like figures in a society that has had quite enough of HR for now. Maybe the first step for liberals to be likable again is to let go of the idea that they can make everyone believe the right things, if only they are equipped with the right moderation tools.

Very seriously,

Josh

[1] One expert PolitiFact quoted actually gave unintentional backing to Hume’s take: geriatrician Donald Jurivich told the outlet “senile” is “a pejorative descriptor” that “reflects unmitigated ageism.” While Jurivich offered this as a criticism of Hume, his statement also reflects a view that Hume’s comment was an insult, not a claim that can be adjudicated “true” or “false.”

This article conflates fact checking with a new content moderation policy that explicitly permits users to post various slurs directed at minority groups (which is what Casey Newton is referencing, I believe). If you like that kind of thing, then sure, but there’s a reason Gab, Parler, and Truth Social aren’t wildly popular.

Further, Josh is ignoring the societal misery promoted by grifters at scale on Meta, which the world is vastly better off without, and it’s a shame that they’re probably going to allow it again. Do we need more QAnon? Was it good when the “Plandemic” video went massively viral on Facebook? Is it only fair that Alex Jones gets more space to organize vicious mobs to harass Sandy Hook parents? At the time, MAGA conservatives attacked all of the moderation choices to delete these accounts or cut back on their reach as anti-right-wing. Meta can do whatever it wants, but this set of decisions is likely to make the platform dumber and crueler, as Josh said about Trump’s reelection.

> a ridiculous cadre of people operating in a fake domain of expertise

Came for the well thought out punditry, stayed for the spicy (and true) insults. xD